Improving the lake scheme within a coupled WRF-Lake model in the Laurentian Great Lakes

Abstract

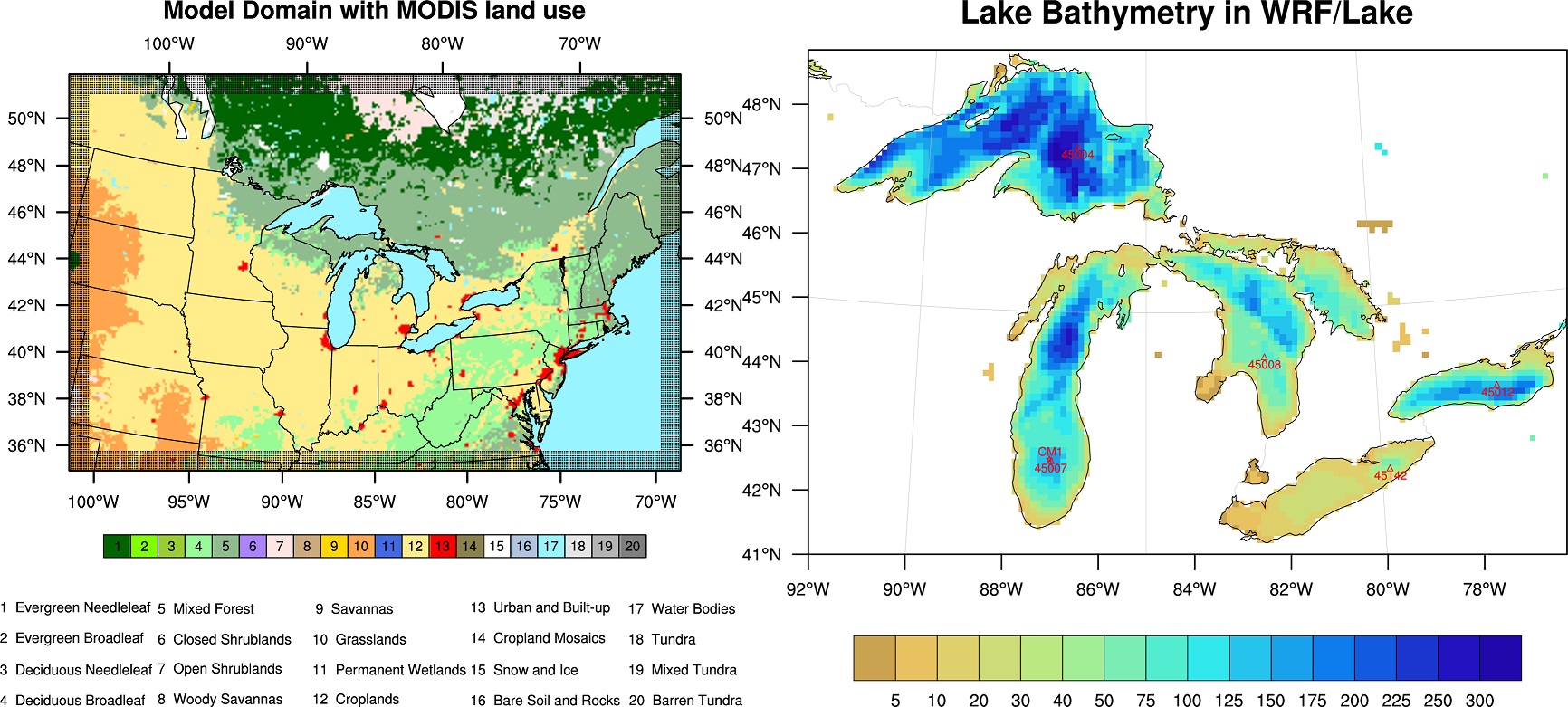

In this study, a one-dimensional (1-D) thermal diffusion lake model within the Weather Research and Forecasting (WRF) model was investigated for the Laurentian Great Lakes. In the default 10-layer lake model, the albedos of water and ice are specified as constants, 0.08 and 0.6, respectively, ignoring shortwave partitioning and zenith angle, ice melting, and snow effects. Several modifications were added to the lake model, including a dynamic lake surface albedo, tuned vertical diffusivities, and a sophisticated treatment of snow cover over lake ice. A set of comparison experiments was carried out to evaluate the performance of the different lake schemes in the coupled WRF-lake modeling system. Results show that the 1-D lake model is able to capture the seasonal variability of lake surface temperature (LST) and lake ice coverage (LIC). However, it produces early warming and rapid cooling of LST in deep lakes, and excessive, early, and persistent LIC in all lakes. Increasing vertical diffusivity can reduce the bias in the 1-D lake model, but only to a limited extent. After incorporating a sophisticated treatment of lake surface albedo, the new lake model produces more reasonable LST and LIC than the default lake model, indicating that the processes of ice melting and snow accumulation are important for simulating lake ice in the Great Lakes. Despite substantial efforts to improve the 1-D lake model, adequately capturing the full dynamics and thermodynamics of deep lakes remains a considerable challenge.
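To make the two ingredients discussed above concrete, the following is a minimal illustrative sketch, not the WRF-Lake code. It combines a constant-albedo shortwave absorption, using the default values 0.08 and 0.6 quoted above, with one explicit time step of 1-D vertical heat diffusion. The function names, the uniform layer thickness, the single constant diffusivity, the explicit time stepping, the zero-flux bottom boundary, and the water property constants are all assumptions made for illustration; ice thermodynamics and snow are left out entirely.

```python
WATER_ALBEDO = 0.08  # default constant water albedo quoted in the abstract
ICE_ALBEDO = 0.60    # default constant ice albedo quoted in the abstract

RHO_W = 1000.0  # assumed water density, kg m^-3
C_W = 4186.0    # assumed specific heat of water, J kg^-1 K^-1


def absorbed_shortwave(sw_down, ice_covered):
    """Shortwave flux (W m^-2) absorbed at the surface with a constant albedo."""
    albedo = ICE_ALBEDO if ice_covered else WATER_ALBEDO
    return (1.0 - albedo) * sw_down


def step_column(temps, dz, dt, kappa, surface_flux):
    """Advance a 1-D temperature column by one explicit diffusion step.

    temps        : layer temperatures (K), index 0 is the surface layer
    dz           : uniform layer thickness (m), an illustrative assumption
    dt           : time step (s)
    kappa        : constant vertical diffusivity (m^2 s^-1), an assumption
    surface_flux : heat flux into the top layer (W m^-2)
    """
    if kappa * dt / dz ** 2 > 0.5:
        raise ValueError("time step too large for the explicit scheme")

    n = len(temps)
    # Kinematic heat flux (K m s^-1) at each layer interface, positive downward.
    flux = [0.0] * (n + 1)
    flux[0] = surface_flux / (RHO_W * C_W)  # surface forcing
    for i in range(1, n):
        flux[i] = -kappa * (temps[i] - temps[i - 1]) / dz
    # flux[n] stays 0.0: no heat exchange through the lake bottom.

    # Each layer warms by the flux entering its top minus the flux leaving its bottom.
    return [temps[i] + dt * (flux[i] - flux[i + 1]) / dz for i in range(n)]
```

For example, calling `step_column` on a 10-layer column with a warm surface and a larger `kappa` moves more heat downward in one step and leaves the surface layer cooler. This is the mechanism by which a larger vertical diffusivity can counteract early surface warming in a model of this kind.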